Add Azure Kinect compression options.

https://microsoft.github.io/Azure-Kinect-Sensor-SDK/master/group___enumerations_gabd9688eb20d5cb878fd22d36de882ddb.html

Is it just the MJPG option you’re looking for, or the chroma/luminance options as well?

Have you heard of any benefits to these? We are currently using the BGRA32 format since it is the default, and I wasn’t sure it would be any faster for us to do the decompression/conversion ourselves than to just have the API do it.

Well, we don’t always want JPEG compression, which loses more data than NV12.

In addition, with NV12 we can use the luma as a monochrome image for other algorithms (like stereo matching) at ~half the bandwidth.
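To illustrate using the luma as a monochrome image: in a tightly packed NV12 frame, the first width * height bytes are the full-resolution Y plane, followed by a half-height plane of interleaved U/V samples. Below is a minimal Python sketch, assuming no row padding (real buffers may have a stride larger than the width); `nv12_luma` is a hypothetical helper name.

```python
def nv12_luma(buf: bytes, width: int, height: int) -> list[list[int]]:
    """Return the Y (luma) plane of a tightly packed NV12 frame as rows of 8-bit values."""
    if width % 2 or height % 2:
        raise ValueError("NV12 needs an even width and height")
    expected = width * height * 3 // 2  # Y plane + half-size interleaved UV plane
    if len(buf) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(buf)}")
    # The first width*height bytes are the luma plane; the UV bytes after it are ignored.
    return [list(buf[row * width:(row + 1) * width]) for row in range(height)]
```

For a stereo-matching pipeline, only this plane would need to reach the algorithm; the chroma bytes can be dropped.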

Question:

When getting a stream from the Kinect, is it possible to acquire only RGB, or only IR/depth or sound, and save bandwidth?

Or does it force you to pass all frame data (IR + RGB + sound, whatever the device outputs)?

(Sometimes we just want to use only IR/depth, or only RGB as a regular camera.)

Thanks for the additional information.

The documentation doesn’t give a lot of detail, but I believe right now that the camera is sending the image in one of its native formats (probably nv12) and then the local machine is doing the conversion to RGB before handing it over to TouchDesigner. We could get TD to do the conversion itself, but I’m not sure that it would save any bandwidth if that is the primary concern.

As far as the other data streams, the camera only sends the data that is requested. So, if the Image parameter in the TOP is set to Color, then the depth camera is disabled and only the color image is sent. Likewise, if the Image is set to Depth or IR, then the color camera is disabled and only the depth image is sent.

Enabling the ‘Align Image to Other Camera’ option will make both cameras active even if you’re only capturing the data from one of them.

The device’s non-image streams work in a similar way: if you’re requesting something that requires body tracking data, e.g. Player Index or CHOP data, then it will request body tracking data from the device. And if you enable the IMU channels in the CHOP, it will automatically enable the IMU data stream. Otherwise, both of those streams are disabled.
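The stream selection described above can be modelled roughly as follows. This is a hypothetical Python sketch of the behaviour as described in this thread, not TouchDesigner’s actual code; the `KinectRequest` fields and the stream names are made up for illustration. One assumption goes beyond the description: body tracking is computed from depth data, so the sketch also enables the depth camera when body data is needed.

```python
from dataclasses import dataclass


@dataclass
class KinectRequest:
    image: str            # TOP 'Image' parameter: "Color", "Depth" or "IR"
    align_to_other: bool  # 'Align Image to Other Camera' toggle
    needs_body: bool      # e.g. Player Index image or body-tracking CHOP data
    imu_channels: bool    # IMU channels enabled in the CHOP


def enabled_streams(req: KinectRequest) -> set[str]:
    """Return the device streams that would be switched on (hypothetical model)."""
    streams: set[str] = set()
    if req.image == "Color":
        streams.add("color")
    elif req.image in ("Depth", "IR"):
        streams.add("depth")  # depth and IR come from the same camera
    else:
        raise ValueError(f"unknown image type: {req.image}")
    if req.align_to_other:
        streams |= {"color", "depth"}  # alignment needs both cameras running
    if req.needs_body:
        streams |= {"depth", "body"}  # assumption: body tracking runs on depth data
    if req.imu_channels:
        streams.add("imu")
    return streams
```

For example, a plain Color TOP with nothing else enabled would only turn on the color camera.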

I hope that answers some of your questions. We can still look into supporting the other color formats, but because of the way graphics cards and the TOPs work we’ll generally end up converting them back to RGB anyway.

Rob, thanks a lot for the quick reply.

As far as I understand, the Azure Kinect RGB camera uses a rolling-shutter sensor.

My guess for the flow of the Azure Kinect device (as far as RGB is concerned) is:

after debayer and ISP → RGB (native) → FPGA (YUV/MJPEG) or left as RGB → through USB 3.0 → system.

(assuming it sends 8 bits per channel)

RGB byte size: 3 * h * w.

NV12 byte size: 1.5 * h * w.
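The byte-size estimate above can be worked through for a 1280x720 frame with a small Python calculation, assuming 8 bits per sample and no row padding (`frame_bytes` is a hypothetical helper):

```python
from fractions import Fraction

# Bytes per pixel with 8 bits per sample.
BYTES_PER_PIXEL = {
    "RGB": Fraction(3),      # R, G and B stored for every pixel
    "NV12": Fraction(3, 2),  # full-res Y plus one U/V pair per 2x2 block
    "Y only": Fraction(1),   # just the NV12 luma plane
}


def frame_bytes(fmt: str, width: int, height: int) -> int:
    """Size of one tightly packed frame in bytes."""
    return int(BYTES_PER_PIXEL[fmt] * width * height)


if __name__ == "__main__":
    for fmt in BYTES_PER_PIXEL:
        print(f"{fmt:>6}: {frame_bytes(fmt, 1280, 720):,} bytes")
```

At 1280x720 this gives 2,764,800 bytes for RGB, 1,382,400 for NV12 and 921,600 for the luma plane alone, so NV12 is exactly half of RGB and the luma plane a third.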

Once the NV12 data gets to the GPU as a monochrome texture, you pass it through a YUV420-to-RGB fragment shader.
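What such a YUV420-to-RGB shader computes per pixel can be sketched on the CPU in Python: each output pixel uses its own Y sample and shares one U/V pair with the other three pixels in its 2x2 block. The BT.601 limited-range coefficients are an assumption; which matrix and range the camera actually uses would need checking, and a real implementation would run this on the GPU rather than in a Python loop.

```python
def clamp8(x: float) -> int:
    """Round and clamp to 0..255, like a shader writing to an 8-bit target."""
    return max(0, min(255, round(x)))


def yuv_to_rgb(y: int, u: int, v: int) -> tuple[int, int, int]:
    """Convert one YUV sample to RGB (assumed BT.601, limited range)."""
    c = 1.164 * (y - 16)
    d = u - 128
    e = v - 128
    return (
        clamp8(c + 1.596 * e),
        clamp8(c - 0.392 * d - 0.813 * e),
        clamp8(c + 2.017 * d),
    )


def nv12_to_rgb(buf: bytes, width: int, height: int) -> list[list[tuple[int, int, int]]]:
    """Convert a tightly packed NV12 frame to rows of (R, G, B) tuples."""
    y_plane = buf[: width * height]
    uv_plane = buf[width * height :]  # height/2 rows of U0 V0 U1 V1 ...
    rows = []
    for row in range(height):
        uv_row_start = (row // 2) * width  # one chroma row per two luma rows
        out = []
        for col in range(width):
            uv = uv_row_start + (col // 2) * 2  # one U/V pair per two columns
            out.append(yuv_to_rgb(y_plane[row * width + col], uv_plane[uv], uv_plane[uv + 1]))
        rows.append(out)
    return rows
```

In a fragment shader the same arithmetic would typically sample the Y plane at full resolution and the UV plane at half resolution, letting the texture coordinates do the `// 2` indexing.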

- We save half the bandwidth, and the conversion isn’t expensive.

- We get full luma values!

- This pipeline isn’t specific to the Azure Kinect and can be used in general.

Same idea for MJPEG, with its own pros and cons, though I’m not sure GPUs offer any decompression for it.

Maybe it’s all handled by the SDK?

I’m not too familiar with it.

The Sensor SDK can provide color images in the BGRA pixel format. This is not a native mode supported by the device and causes additional CPU load when used. The host CPU is used to convert from MJPEG images received from the device.

Placed question

I had forgotten about that Hardware spec page, it has a lot of good details on it.

If I’m reading it correctly though, RGB is never sent across the wire. It sounds like in most cases the camera sends the signal over USB as MJPEG and only decompresses it to RGB locally on the host machine…


Based on the spec sheet, it appears that only 1280x720 supports uncompressed video as either YUY2 or NV12. However, I’m assuming that would actually be higher bandwidth than the usual MJPEG transmission (although you would theoretically get a cleaner signal).
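For a rough sense of the uncompressed numbers at 1280x720, here is a small Python calculation, assuming 30 fps and ignoring USB/UVC protocol overhead. MJPEG is left out because its frame size depends on image content and compression quality, so it would have to be measured rather than computed.

```python
# Bytes per pixel for the uncompressed color formats (8 bits per sample).
BYTES_PER_PIXEL = {"NV12": 1.5, "YUY2": 2.0, "BGRA32": 4.0}


def raw_mbit_per_s(fmt: str, width: int = 1280, height: int = 720, fps: int = 30) -> float:
    """Uncompressed payload rate in Mbit/s, ignoring USB/UVC protocol overhead."""
    return BYTES_PER_PIXEL[fmt] * width * height * fps * 8 / 1e6


if __name__ == "__main__":
    for fmt in BYTES_PER_PIXEL:
        print(f"{fmt:>6}: {raw_mbit_per_s(fmt):.1f} Mbit/s")
```

This comes out to roughly 332 Mbit/s for NV12, 442 Mbit/s for YUY2 and 885 Mbit/s for BGRA32.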

It should be possible to add support for the uncompressed formats and do the YUV to RGB conversion ourselves in the shader as you mentioned (we already do it for various image/movie formats). I tried a quick experiment to hardcode the format to NV12 and it appeared to still be giving me RGB, so I couldn’t get a good comparison of the difference. I will take a look into it further.
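One way to check what the SDK is actually handing back in an experiment like this is to compare the buffer size with what each layout should produce; the Sensor SDK exposes the relevant information through calls such as `k4a_image_get_size` and `k4a_image_get_format`. A hypothetical Python helper, assuming tightly packed rows:

```python
def guess_color_layout(buffer_size: int, width: int, height: int) -> str:
    """Guess a color buffer's pixel layout from its size alone (hypothetical helper).

    Assumes tightly packed rows; padded strides and MJPEG data (whose size
    varies from frame to frame) fall through to "unknown".
    """
    pixels = width * height
    candidates = {
        pixels * 3 // 2: "NV12",  # Y plane + half-size UV plane
        pixels * 2: "YUY2",       # 4:2:2, two bytes per pixel
        pixels * 4: "BGRA32",     # four bytes per pixel
    }
    return candidates.get(buffer_size, "unknown (compressed or padded)")
```

At 1280x720 a BGRA32 buffer is 3,686,400 bytes while an NV12 buffer is 1,382,400 bytes, so the two are easy to tell apart.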

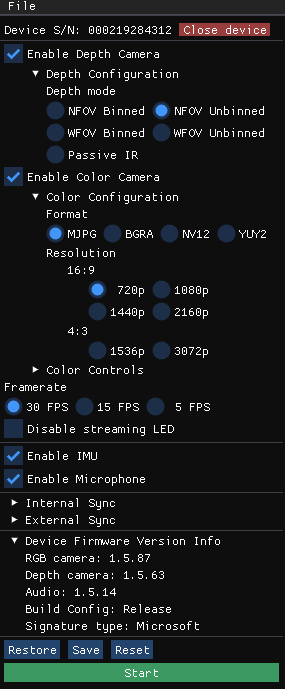

You can also check the Kinect viewer, as it seems you can output all 4 color configurations, which contradicts the table you mentioned.

What am I missing? :) I’m a bit confused :thinking:.

If you click on NV12 or YUY2 though, it disables all of the other resolutions except for 720p and if you have it in 1080p and switch it to NV12 it will change the resolution back to 720p (at least that’s how it works on my version).
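The viewer behaviour described above amounts to a simple rule: the uncompressed formats only allow 720p, and switching to them snaps the resolution back. A hypothetical Python sketch of that rule; the resolution list mirrors the SDK’s color resolution modes, and treating BGRA32 as available at every resolution (since it is decoded from MJPEG on the host) is an assumption.

```python
# Color resolutions offered by the Sensor SDK, named by their vertical size.
ALL_RESOLUTIONS = ("720p", "1080p", "1440p", "1536p", "2160p", "3072p")


def allowed_resolutions(fmt: str) -> tuple[str, ...]:
    """Resolutions a color format may use (hypothetical helper)."""
    if fmt in ("NV12", "YUY2"):
        return ("720p",)  # uncompressed formats: 1280x720 only
    if fmt in ("MJPG", "BGRA32"):
        return ALL_RESOLUTIONS  # assumption: BGRA32 follows MJPEG since it is decoded on the host
    raise ValueError(f"unknown color format: {fmt}")


def pick_resolution(fmt: str, current: str) -> str:
    """Keep the current resolution if allowed, otherwise fall back like the viewer does."""
    allowed = allowed_resolutions(fmt)
    return current if current in allowed else allowed[0]
```

So switching a 1080p MJPEG configuration to NV12 would return 720p, while staying on MJPEG keeps 1080p.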

I guess I jumped to conclusions too quickly about the viewer (I’m not in front of my work desk right now).

Thanks for the quick replies.

No problem. I hadn’t dug into these features of the camera very much yet so the research has been interesting. We’re still actively working on the Kinect support for TD, so let us know if there’s anything else we can do to improve the experience.